CLASS 31. Bayesian Methods.

Bayesian Thinking

Holder and Lewis, 2003 (Moodle);

Hitchcock, 2003 (Moodle) [OPTIONAL]

Two schools of thought in statistics: classical (frequentist) and Bayesian.

In Bayesian statistics, a parameter is not treated as an unknown constant. Instead, each parameter has a probability distribution that describes the uncertainty about its value. Before the data are analyzed, this distribution is called the prior distribution; after the data are taken into account, it is called the posterior distribution.

The posterior distribution is calculated from the prior distribution using Bayes' theorem.
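For reference, Bayes' theorem can be written as follows. The symbols are chosen here for illustration: θ stands for the parameter(s) and X for the data.

$$
P(\theta \mid X) = \frac{P(X \mid \theta)\, P(\theta)}{P(X)}, \qquad P(X) = \int P(X \mid \theta)\, P(\theta)\, d\theta
$$

Here P(θ) is the prior, P(X | θ) is the likelihood, P(θ | X) is the posterior, and P(X) is the normalizing constant. For a discrete parameter the integral becomes a sum.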

An example of Bayes' theorem used in practice.
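One possible illustration, not necessarily the example used in class, is a diagnostic test. The sketch below assumes hypothetical numbers: a disease prevalence of 1% (the prior), a test that detects 99% of true cases, and a 5% false-positive rate. The function name `posterior` is also just an illustrative choice.

```python
def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Posterior probability of a hypothesis after one observation (Bayes' theorem)."""
    # Normalizing constant: total probability of the observation.
    evidence = likelihood_if_true * prior + likelihood_if_false * (1.0 - prior)
    return likelihood_if_true * prior / evidence

# Hypothetical numbers: prevalence 1%, sensitivity 99%, false-positive rate 5%.
p = posterior(prior=0.01, likelihood_if_true=0.99, likelihood_if_false=0.05)
print(f"P(disease | positive test) = {p:.3f}")  # prints 0.167
```

Even with a fairly accurate test, the posterior stays low because the prior is low, which shows how strongly the prior can shape the result when the data are limited.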

Priors

- Expectations from a model

- Past observations of similar situations

- Vague (non-informative or flat) priors

One has to make sure that the model is not over-parameterized: when the data contain too little information to estimate all the parameters, the posterior may become sensitive to the choice of prior distribution.

Markov Chain Monte Carlo

The normalizing constant (see Bayes' theorem above) is difficult to calculate because it involves a complicated summation or high-dimensional integration. In phylogenetic analyses, the normalizing constant corresponds to a summation over all possible tree topologies, combined with integration over all possible branch lengths in every tree and over all possible parameter values of the substitution model.
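To get a feel for why the sum over topologies alone is intractable, the sketch below counts the distinct unrooted bifurcating topologies for n labeled taxa, using the standard result (2n-5)!! = 3 × 5 × ... × (2n-5). The function name is an illustrative choice.

```python
def num_unrooted_trees(n_taxa):
    """Number of unrooted bifurcating topologies for n_taxa labeled tips: (2n-5)!!"""
    if n_taxa < 3:
        raise ValueError("need at least 3 taxa")
    count = 1
    for k in range(3, 2 * n_taxa - 4, 2):  # odd factors 3, 5, ..., 2n-5
        count *= k
    return count

for n in (4, 5, 10, 20, 50):
    print(n, num_unrooted_trees(n))
```

For 10 taxa there are already 2,027,025 topologies, and each of them still requires integration over branch lengths and substitution-model parameters.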

Solution:

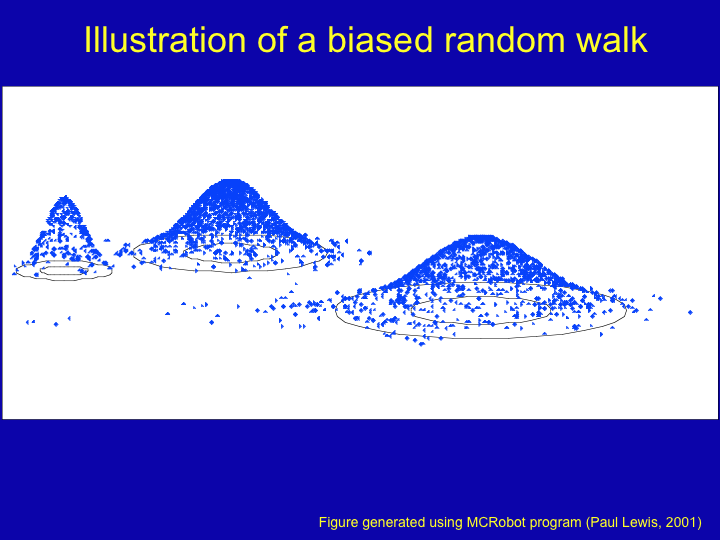

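The Metropolis-Hastings MCMC algorithm (Metropolis et al., 1953; Hastings, 1970), which approximates the integration through biased sampling: parameter values are visited in proportion to their posterior probability, so the normalizing constant never has to be computed.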
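A minimal sketch of the idea, assuming a one-dimensional target and a symmetric random-walk proposal (in which case the Hastings correction equals 1 and the rule reduces to the original Metropolis rule). The target here is a standard normal density with its normalizing constant deliberately left out; the function names and step size are illustrative choices.

```python
import math
import random

def metropolis_hastings(log_target, x0, n_iter, step=2.0, seed=0):
    """Random-walk Metropolis sampler for a 1-D unnormalized log density."""
    rng = random.Random(seed)
    x, log_p = x0, log_target(x0)
    samples = []
    for _ in range(n_iter):
        x_new = x + rng.uniform(-step, step)  # symmetric proposal
        log_p_new = log_target(x_new)
        # Accept with probability min(1, p_new / p); any constant factor cancels.
        if rng.random() < math.exp(min(0.0, log_p_new - log_p)):
            x, log_p = x_new, log_p_new
        samples.append(x)  # a rejected proposal repeats the current state
    return samples

def log_unnormalized_normal(x):
    """log of exp(-x^2 / 2); the 1/sqrt(2*pi) factor is intentionally omitted."""
    return -0.5 * x * x

samples = metropolis_hastings(log_unnormalized_normal, x0=5.0, n_iter=50000)
kept = samples[5000:]  # drop the early part of the chain
mean = sum(kept) / len(kept)
var = sum((s - mean) ** 2 for s in kept) / len(kept)
print(f"mean = {mean:.2f}, variance = {var:.2f}")  # expected to be near 0 and 1
```

Only the ratio of target densities enters the acceptance step, which is why the sampler works without the normalizing constant.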

When the landscape contains multiple peaks, MCMC has difficulty moving from one peak to another. A solution is implemented in the so-called Metropolis-coupled MCMC (MCMCMC or MC3):

n chains run in parallel; one is "cold" and the rest are "heated". The heated chains see the landscape flattened (you can think of the peaks as being melted), so they cross the valleys between peaks more easily. The chains periodically attempt to swap states, and only the cold chain is sampled.
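The sketch below illustrates the coupling on a toy one-dimensional landscape with two peaks. It assumes an incremental heating scheme in which chain i samples the target raised to the power β_i = 1 / (1 + heat × i), with i = 0 being the cold chain; the heating value, the target and all names are illustrative choices rather than settings from any particular program.

```python
import math
import random

def log_target(x):
    """Unnormalized log density with two separated peaks, at -3 and +3."""
    a = -0.5 * ((x + 3.0) / 0.7) ** 2
    b = -0.5 * ((x - 3.0) / 0.7) ** 2
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))

def mc3(n_iter, n_chains=4, heat=1.0, step=1.0, seed=1):
    """Metropolis-coupled MCMC; returns the states visited by the cold chain."""
    rng = random.Random(seed)
    betas = [1.0 / (1.0 + heat * i) for i in range(n_chains)]  # betas[0] = 1 (cold)
    states = [-3.0] * n_chains  # all chains start on the left peak
    cold_samples = []
    for _ in range(n_iter):
        # Within-chain Metropolis update; chain i targets the density ** betas[i].
        for i, beta in enumerate(betas):
            x_new = states[i] + rng.uniform(-step, step)
            log_ratio = beta * (log_target(x_new) - log_target(states[i]))
            if rng.random() < math.exp(min(0.0, log_ratio)):
                states[i] = x_new
        # Attempt to swap the states of one randomly chosen pair of adjacent chains.
        i = rng.randrange(n_chains - 1)
        j = i + 1
        log_swap = (betas[i] - betas[j]) * (log_target(states[j]) - log_target(states[i]))
        if rng.random() < math.exp(min(0.0, log_swap)):
            states[i], states[j] = states[j], states[i]
        cold_samples.append(states[0])
    return cold_samples

cold = mc3(50000)
right_fraction = sum(1 for x in cold if x > 0) / len(cold)
print(f"fraction of cold-chain samples on the right peak: {right_fraction:.2f}")
```

Because the two peaks are equally high, the cold chain should spend roughly half its time on each. The heated chains do the valley crossing, and accepted swaps pass those states down to the cold chain.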

Bayesian Phylogenetics

Formulation of the phylogenetic problem in a Bayesian framework (modified from Yang, "Computational Molecular Evolution", 2006):
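One way to write the posterior in the notation of these notes (tree τ, branch lengths b, substitution-model parameters θ) is given below. The symbol X for the sequence alignment is an assumption introduced here, and the exact form in Yang (2006) may differ.

$$
P(\tau, b, \theta \mid X) = \frac{P(X \mid \tau, b, \theta)\, P(\tau, b, \theta)}{\sum_{\tau} \int_{b} \int_{\theta} P(X \mid \tau, b, \theta)\, P(\tau, b, \theta)\, d\theta\, db}
$$

The denominator is the normalizing constant. MCMC avoids computing it by sampling (τ, b, θ) in proportion to the numerator, along the following lines: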

- Start with a random tree τ, with random branch lengths b and random substitution-model parameters θ.

- Run N iterations (N is very large); each proposal below is accepted or rejected by the Metropolis-Hastings rule:

  - Propose a change to the tree τ (using tree-rearrangement algorithms such as NNI, SPR or TBR).

  - Propose changes to the branch lengths b.

  - Propose changes to the parameters θ.

  - Every k iterations, sample the chain: save τ, b, θ to a file.

- At the end, discard the first M trees (burn-in) and summarize the results.
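The steps above can be sketched on a toy problem. The code below is only a schematic: `log_posterior` is a hypothetical stand-in for log(likelihood × prior) that assigns fixed weights to three four-taxon topologies, whereas a real analysis would compute the likelihood of an alignment. The topology move simply picks one of the other topologies and stands in for NNI, SPR or TBR.

```python
import math
import random
from collections import Counter

# Hypothetical unnormalized posterior weights for the three unrooted 4-taxon topologies.
LOG_WEIGHT = {
    "((A,B),C,D)": math.log(0.7),
    "((A,C),B,D)": math.log(0.2),
    "((A,D),B,C)": math.log(0.1),
}

def log_posterior(tau, b, theta):
    """Toy stand-in for log(likelihood x prior); b and theta must be positive."""
    if b <= 0.0 or theta <= 0.0:
        return -math.inf
    return LOG_WEIGHT[tau] - b - 0.5 * (theta - 1.0) ** 2

def accept(rng, log_ratio):
    """Metropolis rule for a symmetric proposal."""
    return rng.random() < math.exp(min(0.0, log_ratio))

def run_chain(n_iter, sample_every, burn_in, seed=0):
    rng = random.Random(seed)
    topologies = list(LOG_WEIGHT)
    tau = rng.choice(topologies)   # random starting tree
    b = rng.uniform(0.1, 2.0)      # random starting branch length
    theta = rng.uniform(0.1, 2.0)  # random starting model parameter
    samples = []
    for iteration in range(1, n_iter + 1):
        # Propose a change to the tree (symmetric: pick one of the other topologies).
        tau_new = rng.choice([t for t in topologies if t != tau])
        if accept(rng, log_posterior(tau_new, b, theta) - log_posterior(tau, b, theta)):
            tau = tau_new
        # Propose a change to the branch length (sliding window).
        b_new = b + rng.uniform(-0.5, 0.5)
        if accept(rng, log_posterior(tau, b_new, theta) - log_posterior(tau, b, theta)):
            b = b_new
        # Propose a change to the substitution-model parameter.
        theta_new = theta + rng.uniform(-0.5, 0.5)
        if accept(rng, log_posterior(tau, b, theta_new) - log_posterior(tau, b, theta)):
            theta = theta_new
        # Every sample_every iterations, record the current state of the chain.
        if iteration % sample_every == 0:
            samples.append((tau, b, theta))
    return samples[burn_in:]  # discard the first burn_in sampled states

kept = run_chain(n_iter=50000, sample_every=10, burn_in=500)
freq = Counter(tau for tau, _, _ in kept)
for tau, count in freq.most_common():
    print(tau, round(count / len(kept), 2))
```

In this toy setup the sampled topology frequencies should approach the weights 0.7, 0.2 and 0.1, since the chain visits each topology in proportion to its posterior probability.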

Trees can be summarized as a majority-rule consensus tree. Each branch on the tree is associated with a posterior probability (a number between 0 and 1), estimated as the proportion of sampled trees that contain that branch. The posterior probability can be interpreted directly as the probability that the branch/clade is correct, given the data, the model and the prior.
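A minimal sketch of this summary step, assuming each sampled tree has already been reduced to the set of clades it contains (each clade being a set of taxon names). The ten sampled trees below are made up for illustration, and the function names are hypothetical.

```python
from collections import Counter

def clade_posteriors(sampled_trees):
    """Fraction of sampled trees that contain each clade."""
    counts = Counter(clade for tree in sampled_trees for clade in tree)
    n = len(sampled_trees)
    return {clade: c / n for clade, c in counts.items()}

def majority_rule(posteriors, threshold=0.5):
    """Clades present in more than `threshold` of the sampled trees."""
    return {clade: p for clade, p in posteriors.items() if p > threshold}

AB, AC, CD, ABC = (frozenset(s) for s in ("AB", "AC", "CD", "ABC"))

# Hypothetical post-burn-in sample of ten rooted trees on taxa A, B, C, D.
sampled_trees = [{AB, ABC}] * 6 + [{AB, CD}] * 2 + [{AC, ABC}] * 2

post = clade_posteriors(sampled_trees)
consensus = majority_rule(post)
for clade, p in sorted(consensus.items(), key=lambda item: sorted(item[0])):
    print("".join(sorted(clade)), p)
```

Here the clades AB and ABC each appear in 8 of the 10 sampled trees, so both enter the consensus with posterior probability 0.8, while CD and AC (0.2 each) are left out.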